When we look for quick performance wins in computing, caching is usually the first thing we consider. A cache keeps frequently accessed data in fast storage, which reduces the load on your database. It serves repeated requests quickly and avoids redoing the computation that was needed to fetch and build the data the first time. Cached data is typically kept only for a limited time, turning repeated slow operations into fast lookups.

Caches are broadly divided into two types: local and external. A local cache uses the service's own memory (for example, the JVM heap) for storage, while an external (remote or cluster) cache uses a separate in-memory store such as Redis or Memcached.

Types of Caching Strategies and Their Challenges

Local Cache:

A local cache is easy to implement: keep some storage inside the service, such as a HashMap. The drawback is a cache coherence problem. Because each server has its own cache, the caches can hold different values for the same key, so clients may see inconsistent data depending on which server handles the request.
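The coherence problem can be reproduced in a few lines. The following is a minimal sketch in Python, not a production design: the `Server` class, the `database` dictionary, and the `price` key are all hypothetical, and a plain `dict` stands in for the HashMap a JVM service would use.

```python
class Server:
    """A hypothetical service instance with its own in-process cache."""

    def __init__(self, database):
        self.database = database  # shared source of truth
        self.local_cache = {}     # private to this instance

    def get(self, key):
        # Read from the database only on the first request for a key.
        if key not in self.local_cache:
            self.local_cache[key] = self.database[key]
        return self.local_cache[key]


database = {"price": 100}
server_a = Server(database)
server_b = Server(database)

server_a.get("price")           # server_a caches the value 100
database["price"] = 120         # the value changes in the database
stale = server_a.get("price")   # served from server_a's cache
fresh = server_b.get("price")   # server_b reads the database for the first time
```

Here `stale` and `fresh` differ even though both servers were asked for the same key, which is exactly the inconsistency a client would see behind a load balancer.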

External Caches:

External caches store the cached data in a separate fleet, for example using Memcached or Redis. Cache coherence issues are reduced because the external cache holds the value used by all servers in the fleet. Note that inconsistency is still possible if an update to the cache fails. Overall load on downstream services is reduced. Cold starts during events such as deployments are also avoided, because the external cache remains populated throughout the deployment.

External caches provide more storage space than local caches, reducing cache evictions caused by space constraints. They also have drawbacks. The first is increased overall system complexity and operational load, since there is an additional fleet to monitor, manage, and scale. Another is that the availability characteristics of the cache fleet will differ from those of the service it caches for. The cache fleet can often be less available, for example, if it doesn’t support zero-downtime upgrades.
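Because the cache fleet can be down while the service behind it is still up, callers usually need a fallback path. The sketch below is one way to do that, under stated assumptions: `ExternalCacheClient` is an in-memory stand-in for a real Redis or Memcached client (it does not use either library's API), and `CacheUnavailable`, `read`, and `load_from_service` are hypothetical names.

```python
class CacheUnavailable(Exception):
    """Raised by the hypothetical cache client when the fleet cannot be reached."""


class ExternalCacheClient:
    """In-memory stand-in for a client of an external cache fleet."""

    def __init__(self):
        self.store = {}
        self.available = True  # flip to False to simulate an outage

    def get(self, key):
        if not self.available:
            raise CacheUnavailable(key)
        return self.store.get(key)

    def set(self, key, value):
        if not self.available:
            raise CacheUnavailable(key)
        self.store[key] = value


def read(key, cache, load_from_service):
    """Read through the cache, falling back to the service if the cache is down."""
    try:
        cached = cache.get(key)
        if cached is not None:
            return cached
    except CacheUnavailable:
        return load_from_service(key)  # skip the cache entirely

    value = load_from_service(key)
    try:
        cache.set(key, value)
    except CacheUnavailable:
        pass  # a failed cache write must not fail the request
    return value


calls = []  # records every call that reaches the backing service


def load_from_service(key):
    calls.append(key)
    return key.upper()


cache = ExternalCacheClient()
first = read("user", cache, load_from_service)   # miss: loads and caches
second = read("user", cache, load_from_service)  # hit: no service call
cache.available = False
third = read("user", cache, load_from_service)   # outage: falls back to the service
```

The fallback keeps requests working during a cache outage, but it also sends the full load to the backing service, so that service still has to be sized or protected for this case.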

Ways to build a cache:

A) Client-side caching: a web-caching technique that temporarily stores a copy of a web page in the browser's memory rather than in a cache on the server. The browser's memory is on the user’s device, so when a user visits a website with client-side caching enabled, the browser keeps a copy of the page. It is fast because later visits need no network call, but the copy does not reflect real-time data. It works best for data the client needs to load only once to build the application.

B) Server-side caching: On a user’s first request for a web page, the website follows the normal process of requesting the information from the server. After building the response and sending it back to the user, the server saves a copy of the generated content instead of regenerating it from the database on every request. This avoids repeating expensive database operations to serve the same content to many different clients. For websites with no dynamic information, server-side caching is the best option. It keeps servers from being overloaded, reduces the work to be done, and improves page delivery speed.
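As a rough illustration of server-side caching, the sketch below memoizes a page renderer with Python's standard `functools.lru_cache`. The `render_page` function and the `render_count` counter are hypothetical; the function body stands in for the database queries and templating a real site would perform.

```python
from functools import lru_cache

render_count = 0  # counts how often the expensive path actually runs


@lru_cache(maxsize=128)
def render_page(path):
    """Hypothetical page renderer; stands in for database queries plus templating."""
    global render_count
    render_count += 1
    return f"<html><body>Content for {path}</body></html>"


first = render_page("/about")    # rendered and stored in the cache
second = render_page("/about")   # served from the cache, no rendering
info = render_page.cache_info()  # hit and miss counters kept by lru_cache
```

This only works well for content that is the same for every client. When the underlying content changes, `render_page.cache_clear()` drops the stored pages so that the next request renders them again.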

1.) Pre-built cache:

Pre-caching is a technique for proactively caching data in anticipation of future requests. The idea is to cache commonly accessed data or resources in advance so that when a request comes in, you can deliver the result to the end user faster.

The process fetches data from the database, pre-processes it, and builds the cache. If there is a lot of data, building the cache takes time. Any request that reaches the server in the meantime goes to the database, because the cache is incomplete and cannot yet be trusted. This approach suits data that does not need to be real-time: when a modification request arrives, the change takes time to reach the cache, and a subsequent get request will not see the updated data until the scheduler/cron process refreshes the cache.
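The behaviour described above can be sketched as follows. This is an illustrative outline only: `PrebuiltCache`, `rebuild`, and `preprocess` are hypothetical names, the "database" is a plain dictionary, and `rebuild` is called by hand where a real system would run it from a scheduler or cron job.

```python
class PrebuiltCache:
    """Cache that is filled in advance and swapped in only when complete."""

    def __init__(self, database):
        self.database = database
        self.cache = None  # None means the cache has not been built yet

    @staticmethod
    def preprocess(value):
        # Hypothetical pre-processing step applied while building the cache.
        return value.strip().title()

    def rebuild(self):
        # Build into a new dict so readers never see a half-filled cache.
        fresh = {key: self.preprocess(value) for key, value in self.database.items()}
        self.cache = fresh

    def get(self, key):
        if self.cache is None:
            # Cache not ready: answer from the database instead.
            return self.preprocess(self.database[key])
        return self.cache[key]


database = {"city": " new york "}
store = PrebuiltCache(database)

before_build = store.get("city")  # answered from the database
store.rebuild()                   # the scheduled job finishes its first run
database["city"] = "boston"       # a modification arrives between runs
stale = store.get("city")         # the cache still holds the old value
store.rebuild()                   # the next scheduled run
updated = store.get("city")       # now reflects the modification
```

The window between the modification and the next `rebuild` is the price of this approach, which is why it fits data that does not have to be real-time.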

2.) Runtime cache:

When a request reaches the application, it first checks whether the data exists in the cache. If the data is not there, it is pulled from the database and returned in the response, and at the same time written to the cache. When the next request for the same data comes, it is served from the cache, so it gets a faster response than the first request did. If a write/update request comes, the data is updated directly in the database and removed from the cache at the same time. The next get request then follows the same process again.

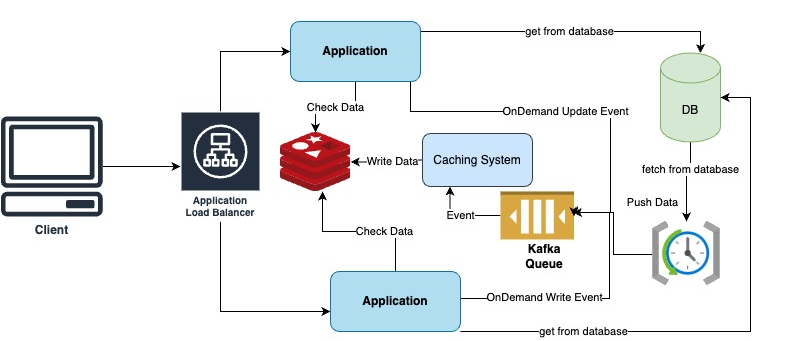
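The runtime flow can be written down in a few lines. The sketch below is a minimal, single-process version with hypothetical names (`CacheAsideStore`, `database_reads`); dictionaries stand in for both the database and the cache.

```python
class CacheAsideStore:
    """Fills the cache on read misses and removes entries on writes."""

    def __init__(self, database):
        self.database = database
        self.cache = {}
        self.database_reads = 0  # how many reads reached the database

    def get(self, key):
        if key in self.cache:
            return self.cache[key]   # cache hit
        self.database_reads += 1     # cache miss: go to the database
        value = self.database[key]
        self.cache[key] = value      # populate the cache for the next request
        return value

    def update(self, key, value):
        self.database[key] = value   # write to the database first
        self.cache.pop(key, None)    # then remove the cached copy


store = CacheAsideStore({"name": "Ada"})
store.get("name")                    # first request: miss, reads the database
store.get("name")                    # second request: hit
reads_before_update = store.database_reads

store.update("name", "Grace")        # update removes the cached entry
after_update = store.get("name")     # miss again, reads the new value
```

Removing the entry on write, rather than overwriting it, keeps the write path simple: the next read repopulates the cache from the database.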

Inconsistency between the database and the cache can still be observed. If you want your distributed system to be both consistent and available, you can combine the two techniques when building a cache.

Conclusion:

In this article, we looked at what caching is and why it has become increasingly important. We also discussed the risks that come with caching. Implementing a well-designed caching system requires time and experience. That is why knowing the most important types of cache, which I aimed to explain here, is necessary for designing the right system.

Thanks for reading! I hope that you found this article helpful. Let me know if you have any suggestions.
